OpenShift and a Raspi NFS Gluster Cluster

Problem

The following describes how to set up OpenShift to use an NFS-based storage class, backed by a Gluster cluster running on Raspberry Pi nodes, for dynamic provisioning of K8s Persistent Volumes via Persistent Volume Claims.

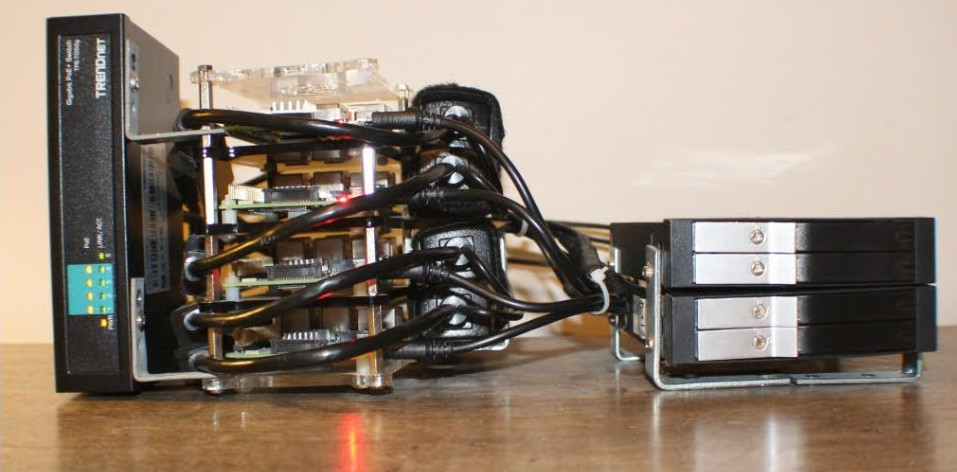

Hardware - Raspberry Pi Cluster

Solution

Install the operating system on all Raspberry Pi nodes in the cluster. We’ll be using Fedora 35 Server.

- Download the Fedora ARM image.

- Prepare the SD card manually.

      xzcat ./Fedora-Server-35-x.x.aarch64.raw.xz | dd status=progress bs=4M of=/dev/sdX

- Boot the OS, then set up authentication and the network connection.

- Resize after `initial-setup` (optional).

Format, check, and mount external physical drives for all nodes in the cluster. To simplify disk management, use LVM.

- Create a Physical Volume (PV).

      pvcreate /dev/sdX

- Create a Volume Group (VG).

      vgcreate gluster /dev/sdX

- Create a Logical Volume (LV).

      lvcreate -n save -l 100%FREE gluster

- Format the LV.

      mkfs.xfs /dev/gluster/save

- Create a target directory for mounting the LV.

      mkdir -p /srv/gnfs

- Back up `fstab`.

      cp /etc/fstab "/etc/fstab.$(date +"%Y-%m-%d")"

- Modify `fstab` to mount the filesystem during boot.

      vim /etc/fstab

      # fstab
      ....
      /dev/gluster/save /srv/gnfs xfs defaults,_netdev,nofail 0 2

- Mount the filesystem with `mount -a` and verify using `df -hT`.
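Because the `fstab` step is repeated on every node, it can help to make it safe to re-run. The following is a minimal sketch, not part of the original procedure: `add_fstab_entry` is a hypothetical helper that appends the mount entry shown above only if no existing line already mounts that device at that mountpoint, and keeps a dated backup first. It takes the fstab path as a parameter so it can be tried on a copy before touching `/etc/fstab`.

```shell
#!/usr/bin/env bash
# Sketch: append the LV mount entry to an fstab file only if it is missing.
# add_fstab_entry is a hypothetical helper name, not part of any tool.
set -eu

add_fstab_entry() {
  local fstab_file="$1" device="$2" mountpoint="$3"
  local entry="${device} ${mountpoint} xfs defaults,_netdev,nofail 0 2"

  # An entry counts as present when field 1 is the device and field 2 is
  # the mountpoint. Commented lines never match because field 1 starts with '#'.
  if awk -v d="$device" -v m="$mountpoint" \
       '$1 == d && $2 == m { found = 1 } END { exit !found }' "$fstab_file"; then
    echo "entry already present"
    return 0
  fi

  # Keep a dated backup, as in the manual steps, then append the entry.
  cp "$fstab_file" "${fstab_file}.$(date +%Y-%m-%d)"
  printf '%s\n' "$entry" >> "$fstab_file"
  echo "entry added"
}
```

On a node, this could be called as `add_fstab_entry /etc/fstab /dev/gluster/save /srv/gnfs` (as root), followed by `mount -a` and `df -hT` as described above.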

Install GlusterFS Server on all nodes in the cluster.

    dnf -y install glusterfs-server
    systemctl enable --now glusterd
    gluster --version

If firewalld is running, allow the GlusterFS service on all nodes.

    firewall-cmd --add-service=glusterfs --permanent
    firewall-cmd --reload

Create a directory for the GlusterFS volume on all nodes.

    mkdir -p /srv/gnfs/dstb

Configure clustering on a node (use any node).

- Probe nodes.

      [root@node03 ~]# gluster peer probe node01
      [root@node03 ~]# gluster peer probe node02
      [root@node03 ~]# gluster peer probe node03   # primary, will have nfs-ganesha
      [root@node03 ~]# gluster peer probe node04

- Confirm status.

      [root@node03 ~]# gluster peer status

- Create volume.

      [root@node03 ~]# gluster volume create vol_gnfs_dstb replica 4 transport tcp \
          node03:/srv/gnfs/dstb \
          node01:/srv/gnfs/dstb \
          node02:/srv/gnfs/dstb \
          node04:/srv/gnfs/dstb

- Start volume.

      [root@node03 ~]# gluster volume start vol_gnfs_dstb

- Confirm volume info.

      [root@node03 ~]# gluster volume info
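The replica count in `gluster volume create` has to match the number of bricks listed, which is easy to get wrong when the node list changes. As a minimal sketch under that assumption (one brick per node, all at the same path), the hypothetical helper `build_volume_create` below derives the replica count from the node list and only prints the command, so it can be reviewed before being run on the cluster.

```shell
#!/usr/bin/env bash
# Sketch: build (but do not run) the "gluster volume create" command for a
# replicated volume with one brick per node.
# build_volume_create is a hypothetical helper name.
set -eu

build_volume_create() {
  local volume="$1" brick_path="$2"
  shift 2
  local nodes=("$@")

  # The replica count equals the number of nodes, one brick each.
  local cmd="gluster volume create ${volume} replica ${#nodes[@]} transport tcp"
  local node
  for node in "${nodes[@]}"; do
    cmd+=" ${node}:${brick_path}"
  done
  printf '%s\n' "$cmd"
}

# Print the command for the four nodes used in this walkthrough.
build_volume_create vol_gnfs_dstb /srv/gnfs/dstb node03 node01 node02 node04
```

The printed line is the same command as in the "Create volume" step, written on a single line.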

Install NFS-Ganesha on the primary node.

    [root@node03 ~]# dnf -y install nfs-ganesha-gluster

Export GlusterFS volume as NFS.

- Back up the Ganesha config.

      [root@node03 ~]# cp /etc/ganesha/ganesha.conf "/etc/ganesha/ganesha.conf.$(date +"%Y-%m-%d")"

- Modify the Ganesha config. See "Exporting GlusterFS volume via NFS-Ganesha" or the example export config for documentation of the options.

      [root@node03 ~]# vim /etc/ganesha/ganesha.conf

      # ganesha.conf
      ...
      NFS_CORE_PARAM {
          ## Allow NFSv3 to mount paths with the Pseudo path, the same as NFSv4,
          ## instead of using the physical paths.
          #mount_path_pseudo = true;

          ## Configure the protocols that Ganesha will listen for. This is a hard
          ## limit, as this list determines which sockets are opened. This list
          ## can be restricted per export, but cannot be expanded.
          #Protocols = 3,4,9P;

          # Use supplied name other than IP in NSM operations
          NSM_Use_Caller_Name = true;

          # Copy lock states into "/var/lib/nfs/ganesha" dir
          Clustered = false;

          # Use a non-privileged port for RQuota
          Rquota_Port = 875;
          NFS_Port = 2049;
          MNT_Port = 20048;
          NLM_Port = 38468;
      }
      ...
      EXPORT {
          ## Export Id (mandatory, each EXPORT must have a unique Export_Id)
          Export_Id = 1;

          ## Exported path (mandatory)
          Path = "/mnt/gnfs";

          ## Pseudo Path (required for NFSv4 or if mount_path_pseudo = true)
          Pseudo = "/mnt/gnfs";

          ## Restrict the protocols that may use this export. This cannot allow
          ## access that is denied in NFS_CORE_PARAM.
          Protocols = "3","4";

          ## Access type for clients. Default is None, so some access must be
          ## given. It can be here, in the EXPORT_DEFAULTS, or in a CLIENT block
          Access_type = RW;

          ## Whether to squash various users.
          Squash = No_root_squash;

          ## Allowed security types for this export
          SecType = "sys";

          ## Exporting FSAL
          FSAL {
              name = GLUSTER;
              hostname = "localhost";
              volume = "vol_gnfs_dstb";
          }

          Transports = "UDP","TCP";  # Transport protocols supported
          Disable_ACL = TRUE;        # To enable/disable ACL
      }
      ...

- Start and enable NFS-Ganesha.

      [root@node03 ~]# systemctl enable --now nfs-ganesha

- If `firewalld` is running, allow NFS services.

      [root@node03 ~]# firewall-cmd --add-service=nfs --permanent
      [root@node03 ~]# firewall-cmd --add-service=mountd --permanent
      [root@node03 ~]# firewall-cmd --add-service=rpc-bind --permanent
      [root@node03 ~]# firewall-cmd --reload

- Verify that the sockets are listening and the NFS share is exported.

      [root@node03 ~]# ss -nltupe | grep -E ':2049|:20048|:111'
      [root@node03 ~]# showmount -e localhost
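The `showmount -e` check can also be scripted so that it fails loudly when the export is missing. This is a minimal sketch: `export_listed` is a hypothetical helper that reads `showmount -e` output on stdin and succeeds only if the given path appears as an export. It assumes the usual output layout, a header line followed by one export per line with the path in the first column.

```shell
#!/usr/bin/env bash
# Sketch: check whether a path appears in "showmount -e" output read from stdin.
# export_listed is a hypothetical helper name.

export_listed() {
  local path="$1"
  # Skip the "Export list for ..." header (line 1), then compare the first
  # column of each remaining line with the expected export path.
  awk -v p="$path" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}
```

On the primary node this could be used as `showmount -e localhost | export_listed /mnt/gnfs && echo "export is visible"`, where `/mnt/gnfs` is the `Path` from the `EXPORT` block above.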

Configure OpenShift to use K8s NFS Subdir External Provisioner as default storage class.

- Clone the git repo and change directory.

      git clone https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner.git
      cd nfs-subdir-external-provisioner

- Replace the namespace parameter and back up the `rbac.yaml` file.

      sed -i.backup 's/namespace:.*/namespace: nfs-storage/g' ./deploy/rbac.yaml

- Replace the namespace parameter and back up the `deployment.yaml` file.

      sed -i.backup 's/namespace:.*/namespace: nfs-storage/g' ./deploy/deployment.yaml

- Modify the `deployment.yaml` file.

      vim ./deploy/deployment.yaml

      # deployment.yaml
      ...
                - name: NFS_SERVER
                  value: NFS.GANESHA.IP.ADDRESS  # primary, will have nfs-ganesha
                - name: NFS_PATH
                  value: /mnt/gnfs
            volumes:
              - name: nfs-client-root
                nfs:
                  server: NFS.GANESHA.IP.ADDRESS  # primary, will have nfs-ganesha
                  path: /mnt/gnfs
      ...

- Back up the `class.yaml` file.

      cp -v ./deploy/class.yaml ./deploy/class.yaml.backup

- Modify the `class.yaml` file.

      vim ./deploy/class.yaml

      # class.yaml
      ...
      metadata:
        annotations:
          storageclass.kubernetes.io/is-default-class: 'true'
        name: managed-nfs-storage
      ...

Perform the following commands as the OpenShift cluster admin.

- Create a new OpenShift project.

      oc new-project nfs-storage

- Deploy the NFS subdir external provisioner.

      oc create -f ./deploy/rbac.yaml
      oc adm policy add-scc-to-user hostmount-anyuid system:serviceaccount:nfs-storage:nfs-client-provisioner
      oc create -f ./deploy/deployment.yaml
      oc create -f ./deploy/class.yaml
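One way to confirm that dynamic provisioning works is to create a small Persistent Volume Claim against the new storage class and check that it becomes bound. The sketch below is an illustration rather than part of the original procedure: `render_test_pvc` is a hypothetical helper that prints a PVC manifest, and the claim name `test-claim` and the `1Mi` size are arbitrary example values. The storage class name `managed-nfs-storage` is the one set in `class.yaml`.

```shell
#!/usr/bin/env bash
# Sketch: print a minimal PersistentVolumeClaim manifest for a given
# storage class. render_test_pvc is a hypothetical helper name.
set -eu

render_test_pvc() {
  local name="$1" storage_class="$2" size="$3"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: ${storage_class}
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
EOF
}

# Print an example claim; the name and size are illustrative values.
render_test_pvc test-claim managed-nfs-storage 1Mi
```

The output can be piped to the cluster with `render_test_pvc test-claim managed-nfs-storage 1Mi | oc apply -n nfs-storage -f -`, and `oc get pvc test-claim -n nfs-storage` should then show the claim with a `Bound` status if the provisioner is working. The claim can be removed afterwards with `oc delete pvc test-claim -n nfs-storage`.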

Summary

Congratulations! You've successfully created an NFS-based storage class on OpenShift that is backed by a Gluster cluster on Raspberry Pi.